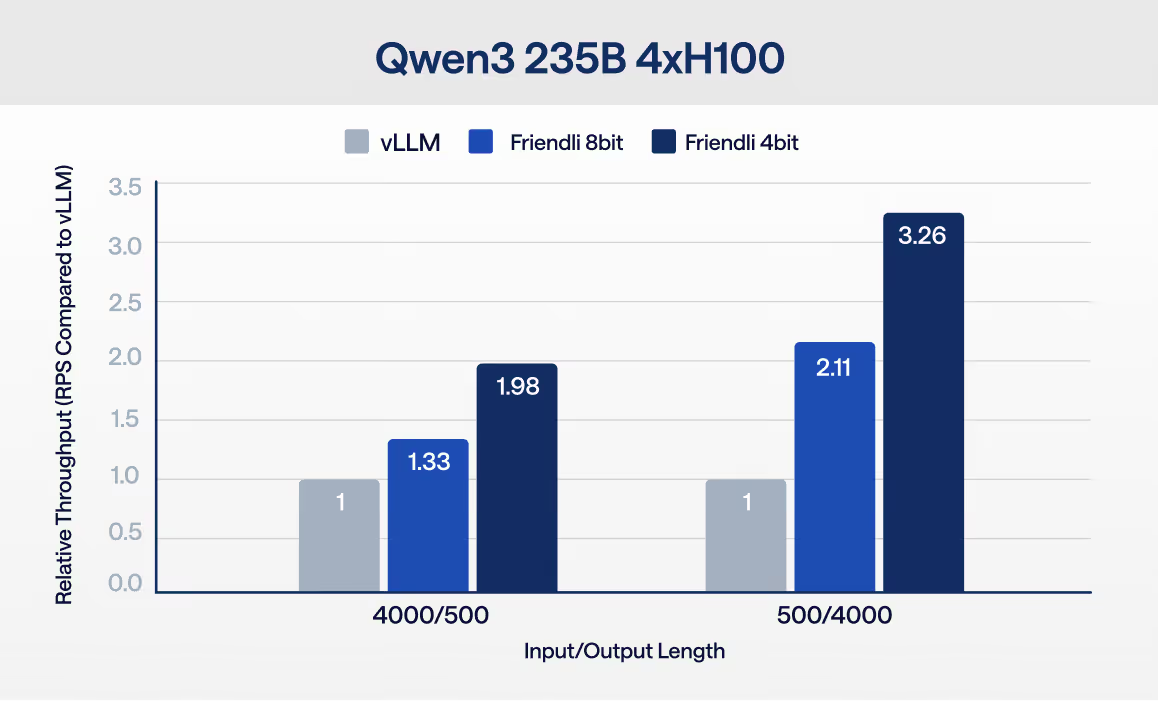

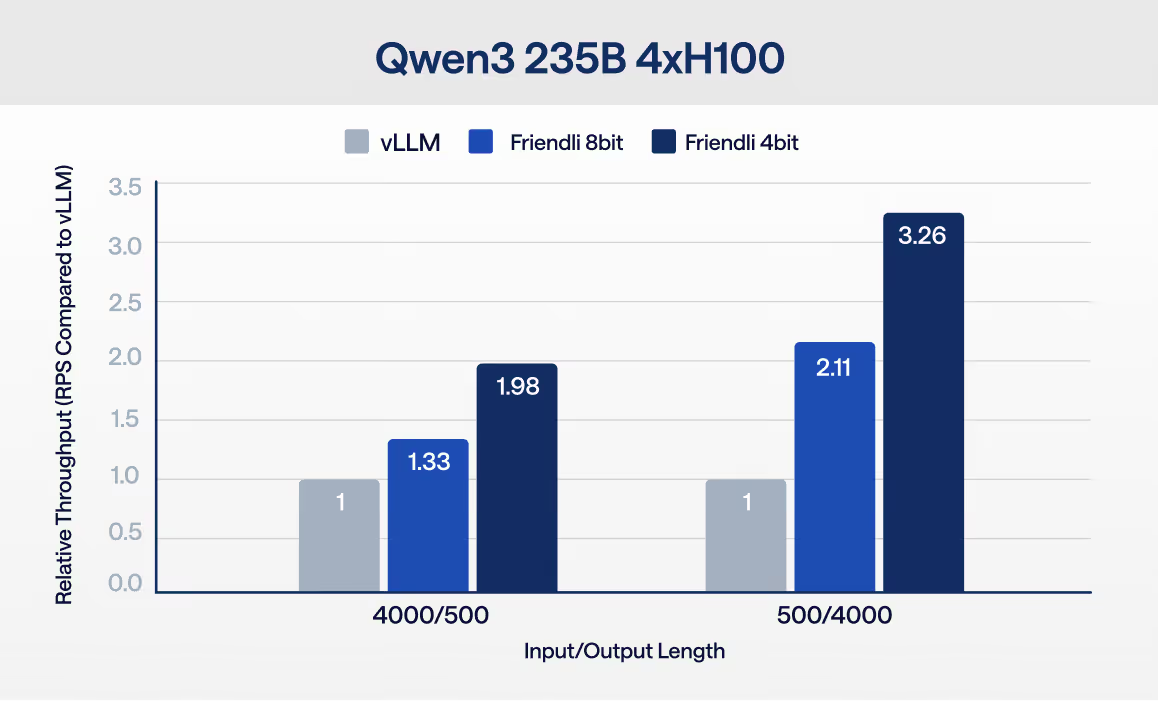

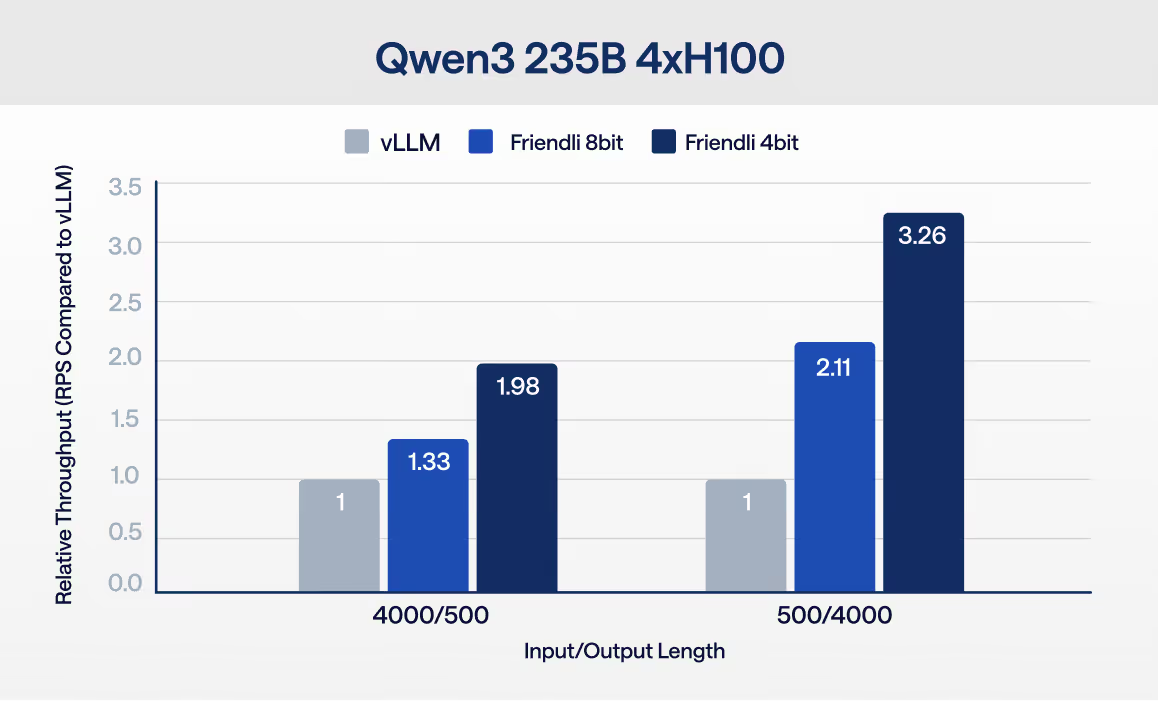

Already running open models on Fireworks AI, Together AI, Google Vertex AI, or Baseten? Switch your inference infrastructure to FriendliAI for 99.99% reliability, 3x throughput, and up to 90% cost savings—with minimal changes to your stack.

Many teams already run open-source models, then hit bottlenecks as traffic grows: latency variance, throughput ceilings, and scaling overhead. FriendliAI is an inference platform that helps those teams serve open models with lower latency, higher throughput, and up to 90% lower inference costs, without changing their application.

First

Submit the form with your details and your current provider's bill

Second

We review and approve your credit amount

Third

Start running inference on FriendliAI using your credits

Get up to $50,000 in inference credits when you move to FriendliAI.

Credits subject to review and approval. Offer available for a limited time.